About

LabelFusion is a pipeline to rapidly generate high quality RGBD data with pixelwise labels and object poses, developed by the Robot Locomotion Group at MIT CSAIL.

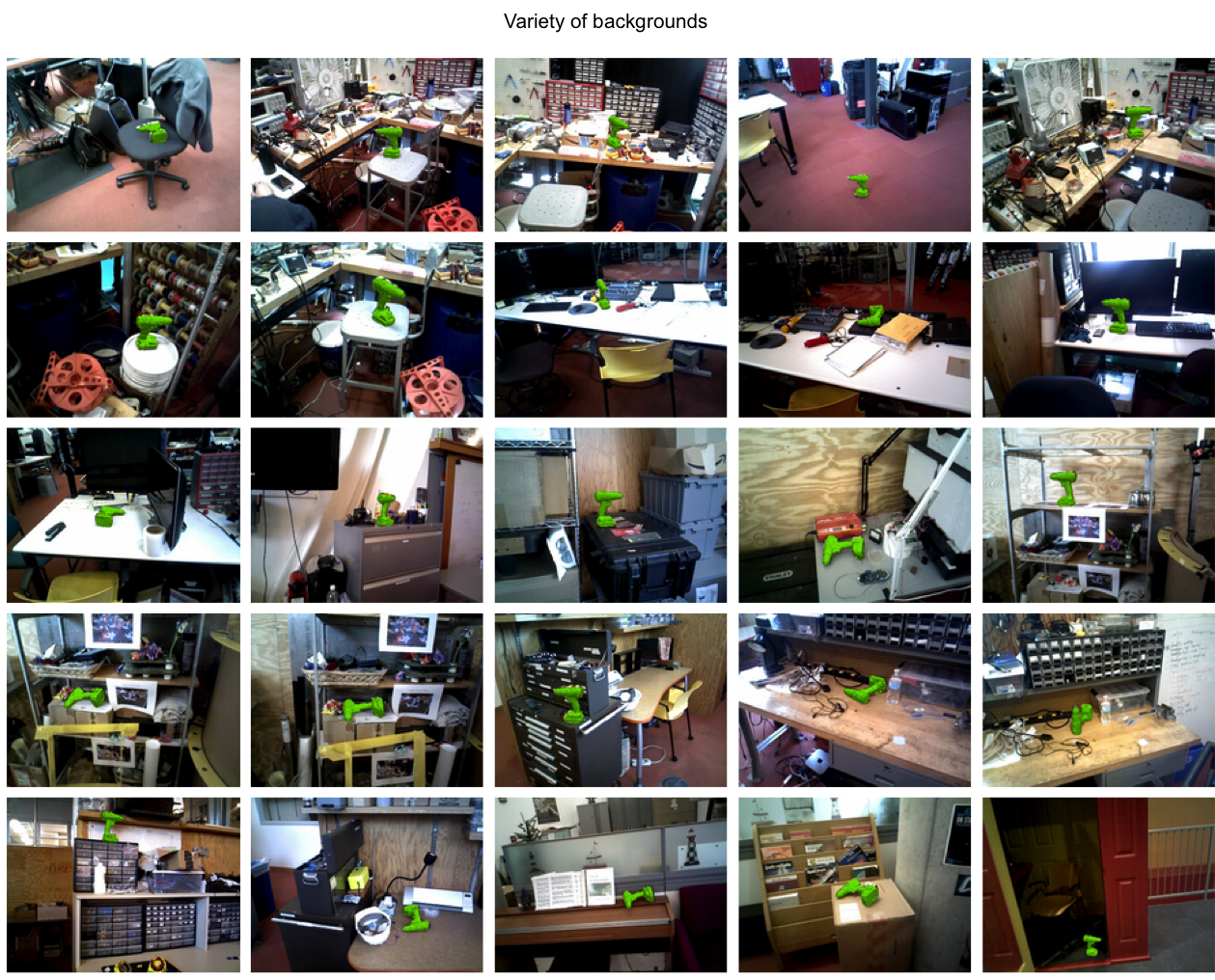

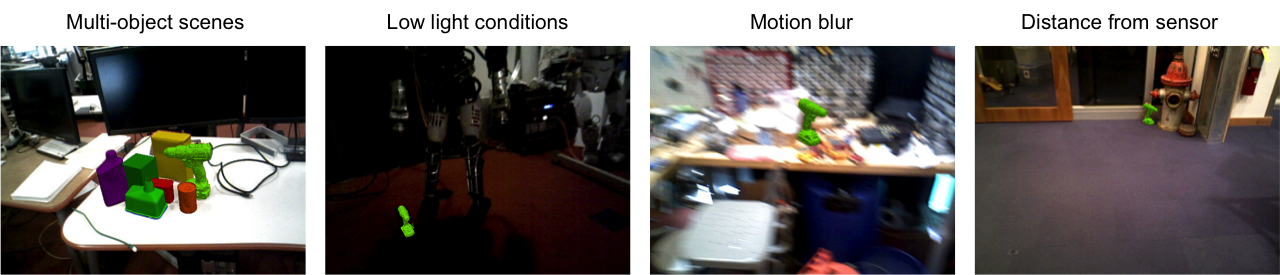

We used this pipeline to generate over 1,000,000 labeled object instances in multi-object scenes with only a few days of data collection and without using any crowdsourcing platform for human annotation.

Our goal is to enable researchers and practitioners to generate customized datasets, which can be used, for example, to train any of the available state-of-the-art neural network architectures for image segmentation.

You can get started here by generating your own dataset for your own object.

Example Data

The images and videos below show some example labeled data generated using LabelFusion.

Technical Description

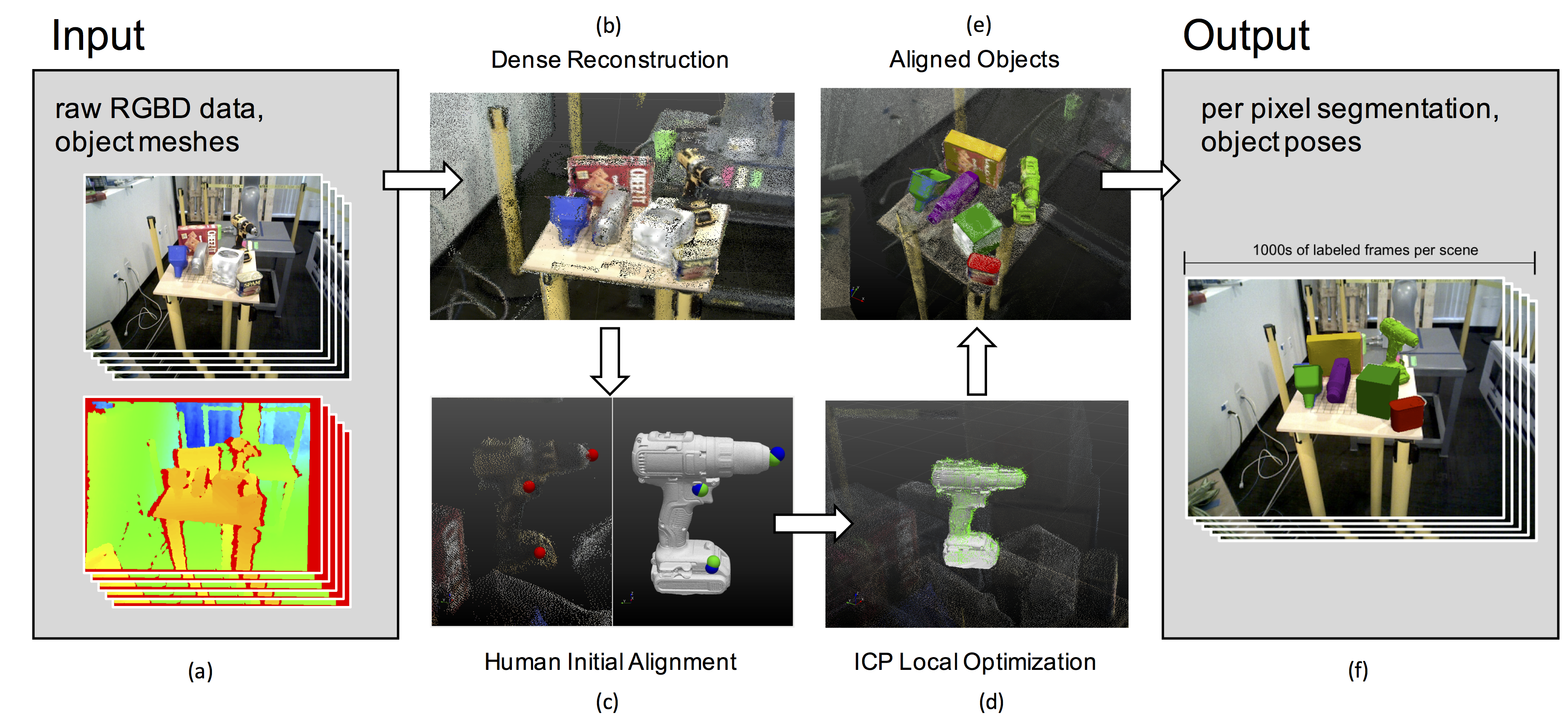

LabelFusion produces ground truth segmentations and ground truth 6DOF poses for multiple objects in scenes with clutter, occlusions, and varied lighting conditions. The pipeline has three key components: dense RGBD reconstruction, which fuses RGBD images taken from a variety of viewpoints; labeling by ICP-assisted fitting of object meshes; and automatic rendering of labels from the projected object meshes. Raw data can be collected either with an automated robot arm or by simply hand-carrying the RGBD sensor. For the dense reconstruction, we use ElasticFusion [1]. For the 3D human annotation step, we implemented an application on top of Director [2], a user interface and visualization framework. After the human annotation step, the rest of the pipeline is automated: it uses the aligned 3D object meshes to generate per-pixel object labels by projecting them back into the 2D RGB images.

[1] Whelan, Thomas, et al. "ElasticFusion: Real-time dense SLAM and light source estimation." The International Journal of Robotics Research 35.14 (2016): 1697-1716. (code)

[2] Marion, Pat, et al. "Director: A user interface designed for robot operation with shared autonomy." Journal of Field Robotics 34.2 (2017): 262-280. (code)
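To make the final, automated step more concrete, here is a minimal sketch of how an aligned object can be projected back into a single RGB frame to obtain per-pixel labels. It is not the LabelFusion implementation. It assumes a pinhole camera with intrinsic matrix `K`, a camera pose from the reconstruction expressed in the same frame as the object pose, and it splats sampled surface points with a simple depth test instead of rasterizing full meshes. All function and variable names (`make_pose`, `object_pose_in_camera`, `render_labels`) are hypothetical.

```python
import numpy as np


def make_pose(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = translation
    return pose


def object_pose_in_camera(world_T_camera, world_T_object):
    """Express an object pose, given in the reconstruction frame, in one camera frame."""
    return np.linalg.inv(world_T_camera) @ world_T_object


def render_labels(points_by_label, camera_T_object_by_label, K, height, width):
    """Project object surface points into the image; the nearest point wins each pixel.

    Simplification: points are splatted one pixel at a time, so the mask is
    sparse unless the objects are densely sampled. Label 0 means background.
    """
    labels = np.zeros((height, width), dtype=np.uint8)
    depth = np.full((height, width), np.inf)
    for label, points in points_by_label.items():
        T = camera_T_object_by_label[label]
        cam = points @ T[:3, :3].T + T[:3, 3]   # object frame -> camera frame
        cam = cam[cam[:, 2] > 0]                # keep points in front of the camera
        uvw = cam @ K.T                         # pinhole projection
        u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
        v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
        z = cam[:, 2]
        inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
        for ui, vi, zi in zip(u[inside], v[inside], z[inside]):
            if zi < depth[vi, ui]:              # depth test handles occlusion
                depth[vi, ui] = zi
                labels[vi, ui] = label
    return labels


# Toy usage: one object, 1 m in front of a camera at the reconstruction origin.
K = np.array([[500.0, 0.0, 32.0],
              [0.0, 500.0, 24.0],
              [0.0, 0.0, 1.0]])
world_T_camera = make_pose(np.eye(3), [0.0, 0.0, 0.0])
world_T_object = make_pose(np.eye(3), [0.0, 0.0, 1.0])
points = np.array([[0.0, 0.0, 0.0], [0.01, 0.0, 0.0]])
camera_T_object = object_pose_in_camera(world_T_camera, world_T_object)
mask = render_labels({1: points}, {1: camera_T_object}, K, 48, 64)
```

Because the object pose is fixed in the reconstruction frame, the same `world_T_object` can be reused for every frame of a log; only `world_T_camera` changes. This is one way to see why a single human alignment can yield labels for many images.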

Code

We recommend getting started with our Docker distribution.

Alternatively, you can build from source using our GitHub repository.

Documentation lives on our GitHub.

Data

You can now download the entire dataset here: full LabelFusion example dataset download (500+ GB; the download will take a couple of hours).

You can also download a smaller sample dataset here: 5 GB download, containing object meshes and two video logs with labels and poses.

Please visit the guide for next steps: Getting Started with LabelFusion Sample Data

Publication

LabelFusion is described in our publication (to appear at ICRA 2018).

Pat Marion, Peter R. Florence, Lucas Manuelli, and Russ Tedrake. "LabelFusion: A Pipeline for Generating Ground Truth Labels for Real RGBD Data of Cluttered Scenes." arXiv preprint arXiv:1707.04796, 2017. (pdf)

Some additional technical details are provided in our supplementary material:

Pat Marion, Peter R. Florence, Lucas Manuelli, and Russ Tedrake. "Supplementary Material for LabelFusion: A Pipeline for Generating Ground Truth Labels for Real RGBD Data of Cluttered Scenes." (pdf)

People